Ensemble Learning

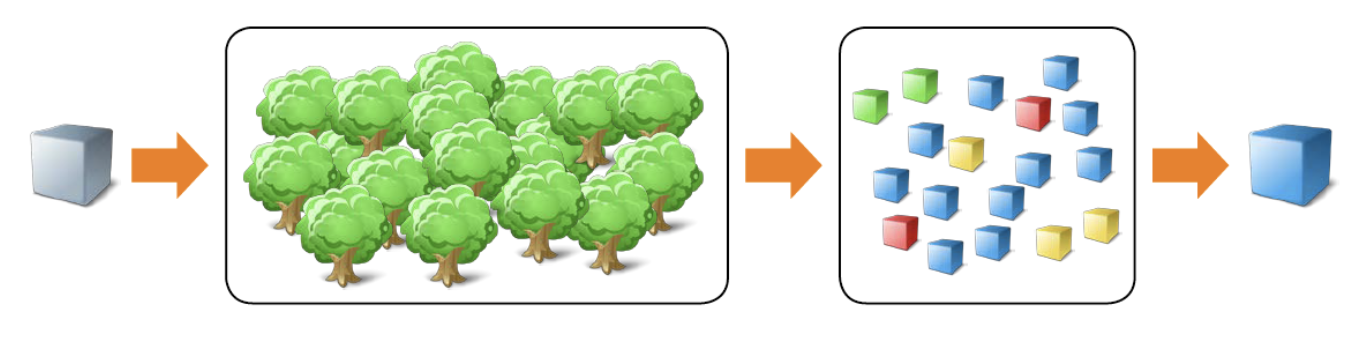

Some machine learning methods are considered weak learners, meaning that they are highly sensitive to the data used to train them.

For example, decision trees are weak learners; two slightly different sets of training data can produce two completely different trees and, consequently, different predictions.

However, this weakness can be harnessed as a strength by creating an ensemble of several trees (or, following the analogous naming, a forest).
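The idea behind bagging can be sketched by hand before using `fitcensemble`. This is a minimal sketch, assuming the `fisheriris` sample data set that ships with Statistics and Machine Learning Toolbox. It trains several trees on bootstrap resamples of the data and combines their predictions by majority vote. The tree count and variable names are illustrative choices, not part of the original notes.

```matlab
% Hand-rolled bagging sketch (illustrative only; fitcensemble does this for you)
load fisheriris                      % meas: 150x4 predictors, species: 150x1 labels
rng(1)                               % reproducible bootstrap samples
Y = categorical(species);
n = numel(Y);
numTrees = 25;                       % assumed ensemble size for the sketch
treePreds = cell(1,numTrees);
for k = 1:numTrees
    idx = randi(n,n,1);                      % bootstrap: n draws with replacement
    tree = fitctree(meas(idx,:),Y(idx));     % one tree per resample
    treePreds{k} = predict(tree,meas);       % each tree predicts every observation
end
allPreds = [treePreds{:}];           % n-by-numTrees categorical array
bagPred = mode(allPreds,2);          % majority vote across the trees
trainAcc = mean(bagPred == Y)        % resubstitution accuracy of the vote
```

Each tree may disagree with the others because it saw a different resample. The vote averages out much of that instability.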

Fitting Ensemble Models

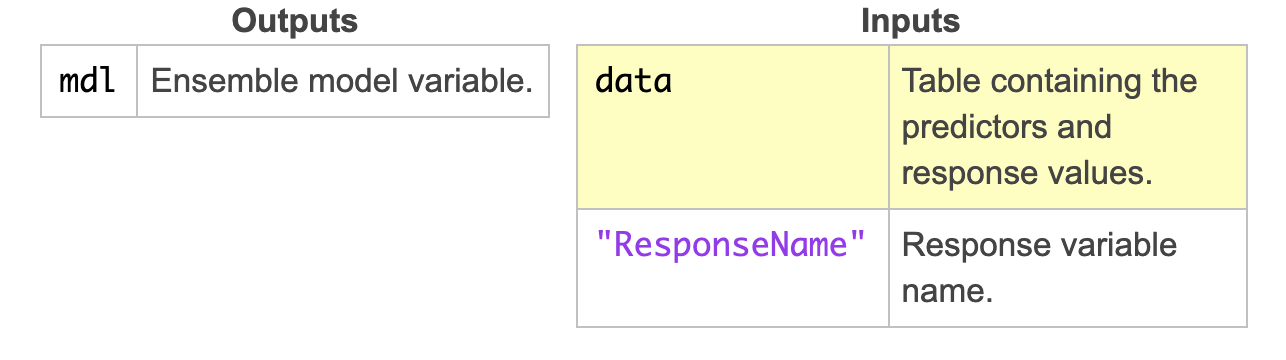

The fitcensemble function creates a classification ensemble of weak learners. Similarly, the fitrensemble function creates a regression ensemble. Both functions have identical syntax.

mdl = fitcensemble(data,"ResponseName")
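The following is a minimal sketch of this shared syntax, assuming the `fisheriris` and `carsmall` sample data sets from Statistics and Machine Learning Toolbox. The table and variable names (`irisTbl`, `carTbl`, `SL`, `SW`, `PL`, `PW`) are hypothetical. In both calls the response is named by its table variable.

```matlab
% Classification ensemble: response is the table variable "Species"
load fisheriris
irisTbl = array2table(meas,"VariableNames",{'SL','SW','PL','PW'});
irisTbl.Species = categorical(species);
clsMdl = fitcensemble(irisTbl,"Species");
clsMdl.Method                        % method chosen by default for this problem

% Regression ensemble: same syntax, response is "MPG"
load carsmall
carTbl = table(Horsepower,Weight,MPG);
regMdl = fitrensemble(carTbl,"MPG");
regMdl.Method
```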

Commonly Used Options

"Method" - Bagging (bootstrap aggregation) and boosting are the two most common approaches used in ensemble modeling. The fitcensemble function provides several bagging and boosting methods. For example, use the "Bag" method to create a random forest.

The default method depends on whether the problem is binary or multiclass classification, as well as on the type of learners in the ensemble.

mdl = fitcensemble(data,"Y","Method","Bag")
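One way to see the effect of "Method" is to fit a bagged and a boosted ensemble on the same cross-validation partition and compare their losses. This is a minimal sketch, assuming the `fisheriris` sample data. "AdaBoostM2" is used here as one of the multiclass boosting methods that `fitcensemble` accepts. The 5-fold split is an arbitrary choice.

```matlab
load fisheriris
rng(0)                                        % reproducible partition and bagging
Y = categorical(species);
cvpt = cvpartition(Y,"KFold",5);              % same folds for both ensembles
mdlBag = fitcensemble(meas,Y,"Method","Bag","CVPartition",cvpt);
mdlBoost = fitcensemble(meas,Y,"Method","AdaBoostM2","CVPartition",cvpt);
lossBag = kfoldLoss(mdlBag)                   % misclassification rate, bagging
lossBoost = kfoldLoss(mdlBoost)               % misclassification rate, boosting
```

Sharing one partition keeps the comparison fair, because both models are evaluated on identical held-out folds.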

"Learners" - You can specify the type of weak learner to use in the ensemble: "tree", "discriminant", or "knn". The default learner type depends on the method specified: the method "Subspace" has default learner "knn", and all other methods have default learner "tree".

mdl = fitcensemble(data,"Y","Learners","knn")

The fitcensemble function uses the default settings for each learner type. To customize learner properties, use a weak-learner template.

mdl = fitcensemble(data,"Y","Learners",templateKNN("NumNeighbors",3))
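Templates work the same way for tree learners. This is a minimal sketch, assuming the `fisheriris` sample data. It uses `templateTree` with "MaxNumSplits" to restrict each bagged tree to at most four splits. The value 4 and the 20 learning cycles are illustrative assumptions.

```matlab
load fisheriris
rng(0)
t = templateTree("MaxNumSplits",4);           % shallow trees instead of the defaults
mdl = fitcensemble(meas,species,"Method","Bag", ...
    "Learners",t,"NumLearningCycles",20);
mdl.NumTrained                                % number of trees in the ensemble
mdl.Trained{1}.NumNodes                       % at most 2*4+1 nodes per tree
```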

You can use a cell vector of learners to create an ensemble composed of more than one type of learner. For example, an ensemble could consist of two types of kNN learners.

"NumLearningCycles" - At every learning cycle, one weak learner is trained for each learner specified in "Learners". The default number of learning cycles is 100. If "Learners" contains only one learner (as is usually the case), then by default 100 learners are trained. If "Learners" contains two learners, then by default 200 learners are trained (two learners per learning cycle).
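The learner count can be checked directly. This is a minimal sketch, assuming the `fisheriris` sample data and a cell vector of two kNN templates (3 and 7 neighbors, both arbitrary) with the "Subspace" method. With 10 learning cycles, the description above predicts 20 trained learners.

```matlab
load fisheriris
rng(0)
learners = {templateKNN("NumNeighbors",3), templateKNN("NumNeighbors",7)};
mdl = fitcensemble(meas,species,"Method","Subspace", ...
    "Learners",learners,"NumLearningCycles",10);
mdl.NumTrained                                % two learners per learning cycle
```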

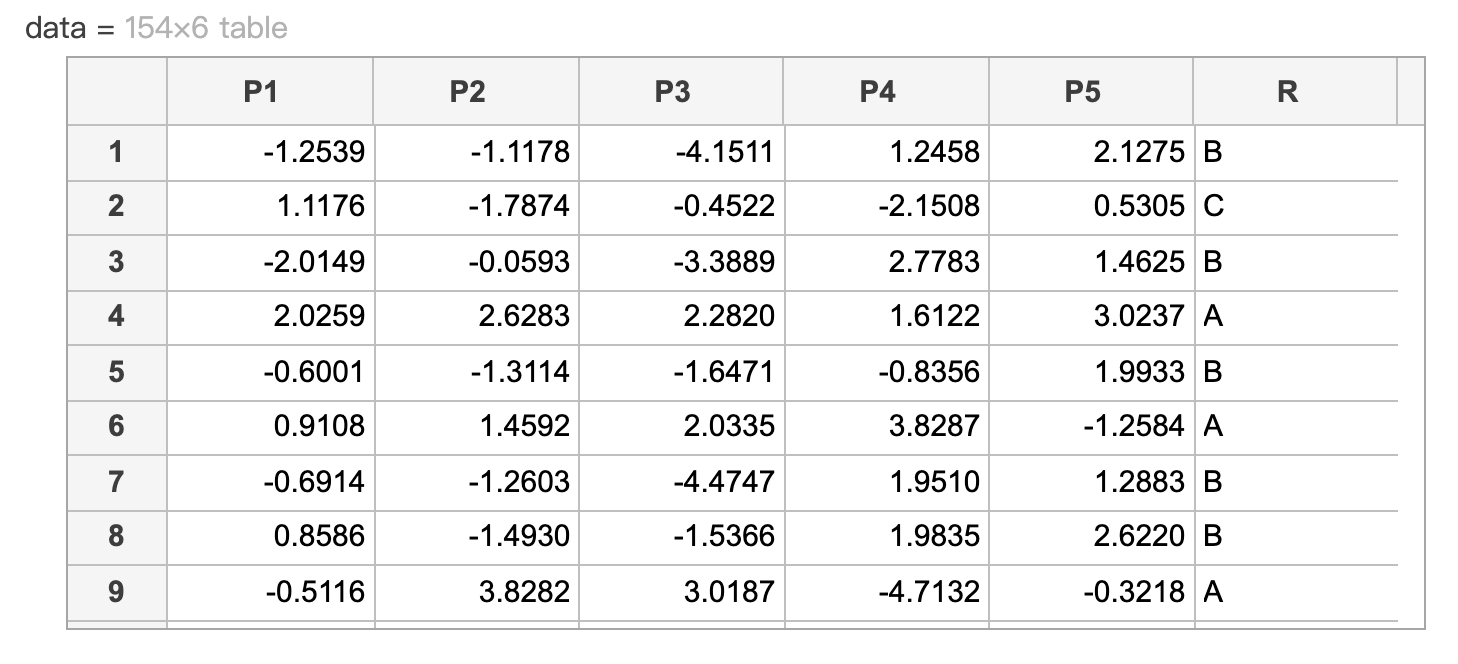

Fit an Ensemble

Fit a random forest to the data in the table below.

This code partitions the data, fits a cross-validated classification tree, and calculates the k-fold loss.

cvpt = cvpartition(data.R,"KFold",3);

mdlTree = fitctree(data,"R","CVPartition",cvpt);

lossTree = kfoldLoss(mdlTree)

Create an ensemble of bagged trees named 'mdlEns2'. Use 30 learners and the cross-validation partition 'cvpt'. Calculate the k-fold loss and name it 'lossEns2'.

mdlEns2 = fitcensemble(data,"R","Method","Bag", ...
    "NumLearningCycles",30,"CVPartition",cvpt);

lossEns2 = kfoldLoss(mdlEns2)

Result: lossTree = 0.0714 and lossEns2 = 0.0455, so the bagged ensemble has a lower cross-validated loss than the single tree.
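The choice of 30 learners can be inspected by looking at how the cross-validated loss changes as trees are added. This is a minimal sketch, assuming the `fisheriris` sample data in place of the original table. It uses the "Mode","cumulative" option of `kfoldLoss` for partitioned ensembles, which returns the loss obtained with the first 1, 2, ..., T learners.

```matlab
load fisheriris
rng(0)
cvpt = cvpartition(species,"KFold",3);
mdlEns = fitcensemble(meas,species,"Method","Bag", ...
    "NumLearningCycles",30,"CVPartition",cvpt);
lossCurve = kfoldLoss(mdlEns,"Mode","cumulative");  % one loss value per ensemble size
lossCurve'                                          % display as a row
```

If the curve has flattened well before the last tree, fewer learning cycles would likely give similar accuracy at lower cost.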

Use Learner Templates

This code partitions the data, fits a k-NN model and calculates the loss.

cvpt = cvpartition(data.R,"KFold",3);

mdlKNN = fitcknn(data,"R","NumNeighbors",5, ...
    "DistanceWeight","inverse","CVPartition",cvpt);

lossKNN = kfoldLoss(mdlKNN)

Create a kNN learner template named knnmodel.

Then create an ensemble named mdlEns2 using the template kNN learners and the cross-validation partition cvpt. Calculate the k-fold loss and name it lossEns2.

knnmodel = templateKNN("NumNeighbors",5,"DistanceWeight","inverse");

mdlEns2 = fitcensemble(data,"R","Learners",knnmodel, "CVPartition",cvpt);

lossEns2 = kfoldLoss(mdlEns2)

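Result: lossKNN = 0.049 and lossEns2 = 0.1104. Here the ensemble of kNN learners has a higher loss than the single kNN model, so an ensemble does not always improve on its weak learners.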

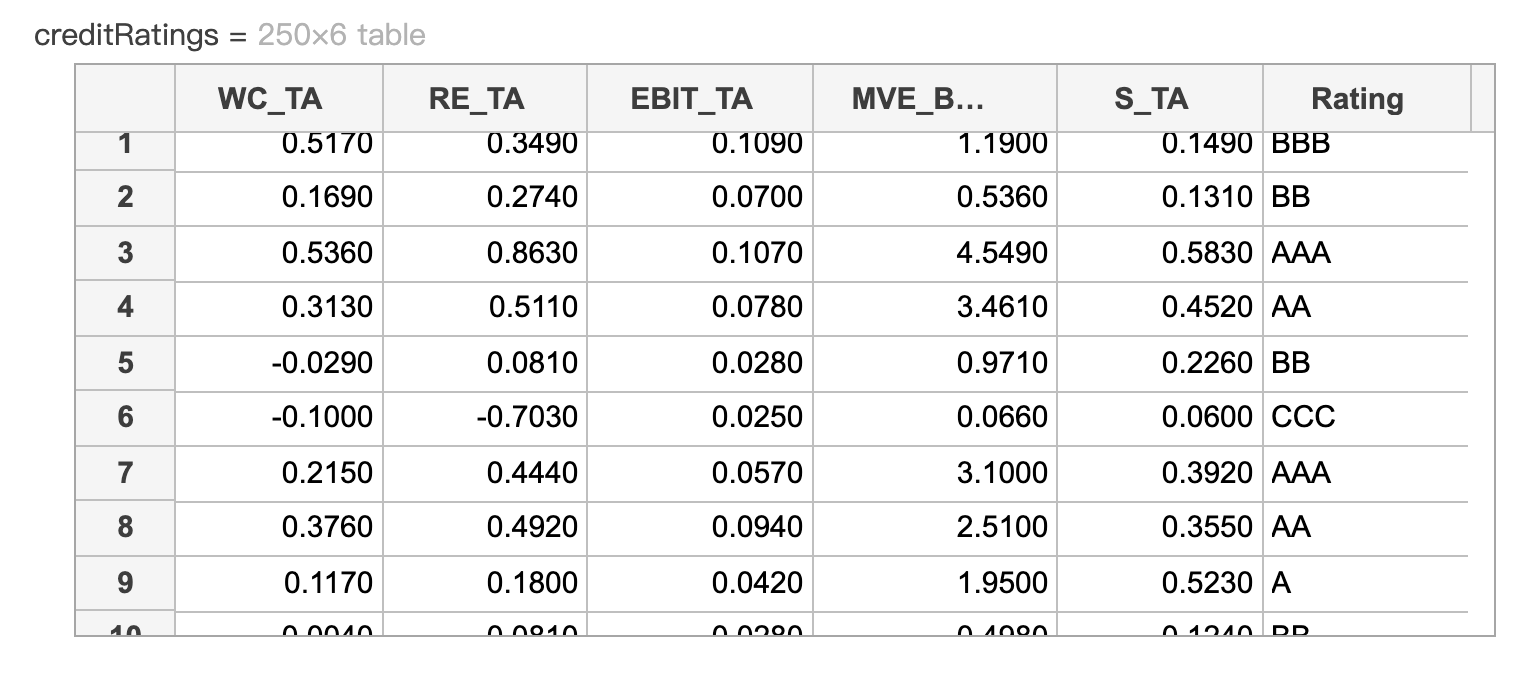

Example

This code loads and displays the credit ratings data and a 7-fold cross-validated tree model of creditRatings.

rng(0)

load creditData

creditRatings

mdlFull

fullLoss = kfoldLoss(mdlFull)

Fit a tree model with 3 or fewer predictors by first reducing the data with principal component analysis (PCA).

[pcs,scrs,~,~,pexp] = pca(creditRatings{:,1:end-1});

% Fit a tree model to the reduced data (first three principal component scores)

mdl = fitctree(scrs(:,1:3),creditRatings.Rating,"KFold",7);

mdlLoss = kfoldLoss(mdl)

mdlLoss = 0.3360

Fit an ensemble with 3 or fewer predictors (this code uses only the first principal component).

mdlEns = fitcensemble(scrs(:,1),creditRatings.Rating,"Method","Bag", ...
    "NumLearningCycles",50,"Learners","tree","KFold",7);

lossEns = kfoldLoss(mdlEns)

lossEns = 0.3680
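The fifth output of `pca` (`pexp` above) gives the percentage of variance explained by each component. It can guide how many components to keep. This is a minimal sketch, assuming the `fisheriris` sample data in place of `creditRatings`. The 95% threshold is an arbitrary illustrative choice.

```matlab
load fisheriris
[~,scrs,~,~,pexp] = pca(meas);               % pexp: percent variance per component
cumExplained = cumsum(pexp)'                 % variance covered by the first k components
numPCs = find(cumsum(pexp) >= 95, 1)         % smallest k reaching the threshold
Xreduced = scrs(:,1:numPCs);                 % reduced predictors for fitctree/fitcensemble
```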